Wi-Fi-Based Linux Display Terminal

"A hardware GUI viewport for Linux with zero OS overhead."

Most portable devices today are locked-down platforms. I wanted something else: an embeddable and hackable terminal I fully control—without app stores or opaque drivers.


What I’ve Actually Built

At the core of the current prototype is an STM32H7 microcontroller. It drives a 720×720 RGB666 display and connects over Wi-Fi through the NRF7002 companion chip, which the MCU controls directly; no separate nRF core is involved. The MCU has two cores: one handles networking, the other decoding. In the near future, I plan to move decoding to a low-power FPGA and networking to a smaller, lighter MCU (see Roadmap).

The device receives live GUI frames streamed from a Linux machine running a custom video encoder. Compression within a frame is similar to JPEG, but instead of sending every frame in full as MJPEG does, I use delta frames based on the differences between consecutive frames. This keeps decoding simple enough for an MCU. The STM32H7 does have a hardware JPEG decoder, but I'm not using it yet: it is cumbersome to integrate with my encoder setup, though not impossible.
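The actual bitstream format isn't described here, so the following is only a minimal sketch of the general delta-frame idea on the decoder side. It assumes a hypothetical layout in which the 720×720 RGB888 framebuffer is split into 16×16 tiles and a delta frame carries only the tiles that changed; the tile size, `delta_tile_t`, and `apply_delta_frame` are illustrative names of my own, and the per-tile JPEG-like decompression is left out.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical layout: 720x720 RGB888 framebuffer split into 16x16 tiles
   (720 / 16 = 45 tiles per row and per column). */
#define FB_W    720
#define FB_H    720
#define BPP     3
#define TILE    16
#define TILES_X (FB_W / TILE)
#define TILES_Y (FB_H / TILE)

/* One changed tile in a delta frame: its index plus already-decoded
   RGB888 pixels (TILE * TILE * BPP bytes, row-major). In a real codec the
   pixels would first be decompressed from the JPEG-like intra-frame data. */
typedef struct {
    uint16_t tile_index;
    const uint8_t *pixels;
} delta_tile_t;

/* Apply a delta frame: overwrite only the tiles that changed and leave the
   rest of the framebuffer (i.e. the previous frame) untouched. */
void apply_delta_frame(uint8_t *fb, const delta_tile_t *tiles, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        if (tiles[i].tile_index >= TILES_X * TILES_Y)
            continue; /* ignore out-of-range tile indices */
        size_t tx = tiles[i].tile_index % TILES_X;
        size_t ty = tiles[i].tile_index / TILES_X;
        for (size_t row = 0; row < TILE; row++) {
            uint8_t *dst = fb + ((ty * TILE + row) * FB_W + tx * TILE) * BPP;
            const uint8_t *src = tiles[i].pixels + row * TILE * BPP;
            memcpy(dst, src, TILE * BPP);
        }
    }
}
```

The point of this structure is that a mostly static GUI produces very few changed tiles, so both bandwidth and decode time scale with how much of the screen actually changes.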

Bandwidth depends on GUI complexity, ranging from nearly nothing up to ~25 Mbit/s at the target 25 FPS. Raw numbers like these lack context, so check out the videos on YouTube and, for more background, the Research page.
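To put the peak figure in perspective, here is the arithmetic, assuming uncompressed RGB888 at 3 bytes per pixel as in the worst-case tests. Streaming raw frames at 25 FPS would need roughly 311 Mbit/s, so the 25 Mbit/s peak corresponds to about a 12:1 reduction, or about 125 kB per frame on average over a second.

```latex
\begin{aligned}
S_{\text{raw}} &= 720 \times 720 \times 3\,\text{B} = 1\,555\,200\,\text{B} \\
R_{\text{raw}} &= S_{\text{raw}} \times 8\,\tfrac{\text{bit}}{\text{B}} \times 25\,\text{s}^{-1} \approx 311\,\text{Mbit/s} \\
S_{\text{peak}} &= \frac{25\,\text{Mbit/s}}{25\,\text{s}^{-1}} = 1\,\text{Mbit} = 125\,000\,\text{B per frame} \\
\frac{R_{\text{raw}}}{25\,\text{Mbit/s}} &\approx 12.4
\end{aligned}
```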

On the client side, decoding a 720×720 RGB888 frame takes between 40 and 150 ms, depending on content. For worst-case testing, I transmit RGB888 compressed data even though the display is RGB666. RGB888 is kept throughout the pipeline, and the LTDC simply ignores the two least significant bits of each color channel when reading from the framebuffer. I plan to support more displays in the future, so testing the worst case makes more sense for now.
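The LTDC does this reduction in hardware, so the firmware needs no code for it. The function below is only an illustration of what "ignoring the two least significant bits" means for each pixel; `rgb888_to_rgb666` is a hypothetical name, and packing the result as an 18-bit value is my own choice for the example.

```c
#include <stdint.h>

/* Illustration only: reduce one RGB888 pixel to the 18-bit RGB666 value
   an RGB666 panel effectively receives when the two least significant
   bits of each 8-bit channel are dropped. Layout: R[17:12] G[11:6] B[5:0]. */
uint32_t rgb888_to_rgb666(uint8_t r, uint8_t g, uint8_t b)
{
    return ((uint32_t)(r >> 2) << 12) |
           ((uint32_t)(g >> 2) << 6)  |
            (uint32_t)(b >> 2);
}
```

Colors that differ only in those two bits, such as 0x80 and 0x83 in one channel, end up identical on the panel. In other words, 6 of the 24 bits per pixel (25%) are transmitted and decoded but never shown, which is exactly why RGB888 serves as the worst case.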

End-to-end latency, from host encoding to display decoding, is low enough for responsive use of simple GUIs. The Linux host does all the heavy lifting; the device just receives rendered frames over the network. It is already usable in some cases, though I'm not fully happy with the result yet. My Roadmap describes how I plan to speed things up further.

The Linux server runs a software encoder that takes approximately 20 ms per frame on an Intel i7-4820K CPU. It is currently single-threaded and unoptimized, with no SIMD or GPU acceleration, so I'm confident its speed can be improved drastically.
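A rough frame budget can be derived from the timings above. It ignores Wi-Fi transfer time and display scan-out, so the latency figures are lower bounds rather than measurements. At the 25 FPS target each frame has 40 ms, which the decoder meets only for its fastest frames, while the encoder alone could sustain about 50 FPS.

```latex
\begin{aligned}
t_{\text{frame}} &= \frac{1}{25\,\text{FPS}} = 40\,\text{ms} \\
f_{\text{dec}} &= \frac{1}{150\,\text{ms}} \approx 6.7\,\text{FPS} \;\text{ to }\; \frac{1}{40\,\text{ms}} = 25\,\text{FPS} \\
f_{\text{enc}} &= \frac{1}{20\,\text{ms}} = 50\,\text{FPS} \\
t_{\text{enc}} + t_{\text{dec}} &= 20\,\text{ms} + (40 \text{ to } 150)\,\text{ms} = 60 \text{ to } 170\,\text{ms}
\end{aligned}
```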

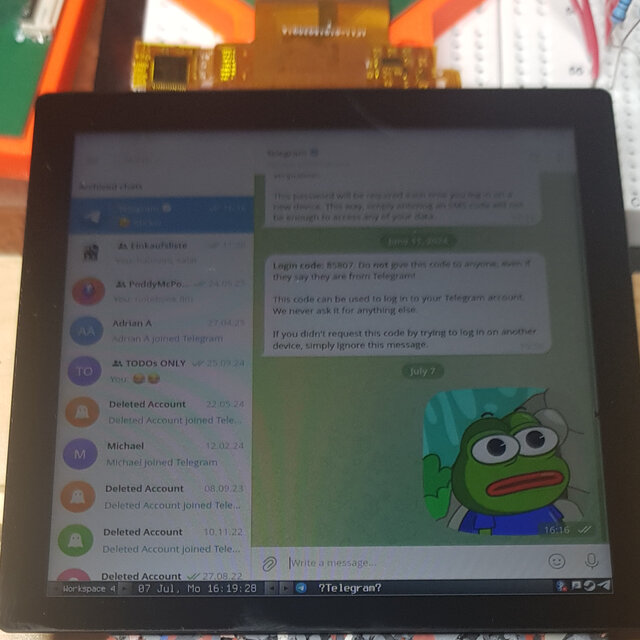

A compact, Wi-Fi-based Linux display terminal built around the STM32H7 and NRF7002.

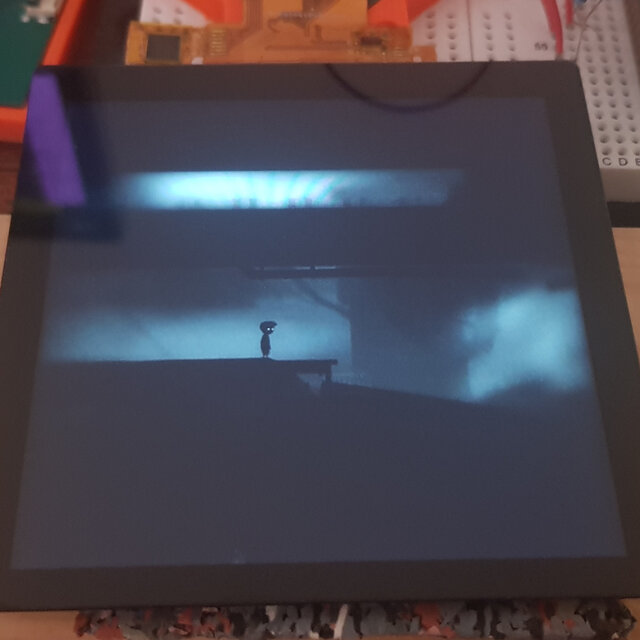

This video shows live GUI streaming over Wi-Fi.

Current Hardware Stack

- STM32H7 MCU

- NRF7002 Wi-Fi

- SDRAM buffer via FMC

- 720×720 RGB666 display

Current Software Stack

On the networking side, the project currently uses FreeRTOS with lwIP and wpa_supplicant. Setting up a connection is surprisingly smooth: you only need to supply a valid wpa_supplicant.conf file with your credentials, and the stack then handles the entire association and authentication process cleanly.
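For reference, a minimal wpa_supplicant.conf for a WPA2-PSK network uses a single `network` block like the one below. The SSID and passphrase are placeholders, and I'm assuming the firmware's wpa_supplicant port reads the standard network-block syntax; options such as `ctrl_interface` that matter on a desktop Linux system may not apply on the MCU.

```conf
# Placeholder credentials: replace with your own network's values
network={
    ssid="YourNetworkName"
    psk="YourPassphrase"
    key_mgmt=WPA-PSK
}
```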

At this stage, a lot of the configuration is still hardcoded. That’s mainly because I’m iterating fast and prototyping features before abstracting them. Over time, I plan to move toward more dynamic configuration and better tooling.

The encoder on the Linux host is written in C++. It is custom software designed to produce output that is easy to decode on embedded hardware, and it streams delta-compressed GUI frames to the terminal over Wi-Fi.
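The encoder's internals aren't published yet, so this is a hypothetical sketch of the step a delta encoder needs before any compression: finding which tiles differ from the previous frame. It is written in plain C for consistency with the other examples here. The 16×16 tile size and the `find_changed_tiles` name are my assumptions, chosen to match a tile-based layout.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical layout: 720x720 RGB888 frames split into 16x16 tiles. */
#define ENC_W       720
#define ENC_H       720
#define ENC_BPP     3
#define ENC_TILE    16
#define ENC_TILES_X (ENC_W / ENC_TILE)
#define ENC_TILES_Y (ENC_H / ENC_TILE)

/* Compare the previous and current frame tile by tile and write the indices
   of changed tiles to `out` (at most `max_out` entries; size it to
   ENC_TILES_X * ENC_TILES_Y to never drop any). Returns the number written.
   Only these tiles would then be compressed and sent. */
size_t find_changed_tiles(const uint8_t *prev, const uint8_t *cur,
                          uint16_t *out, size_t max_out)
{
    size_t n = 0;
    for (size_t ty = 0; ty < ENC_TILES_Y; ty++) {
        for (size_t tx = 0; tx < ENC_TILES_X; tx++) {
            int changed = 0;
            /* Stop comparing rows as soon as one differs. */
            for (size_t row = 0; row < ENC_TILE && !changed; row++) {
                size_t off = ((ty * ENC_TILE + row) * ENC_W + tx * ENC_TILE) * ENC_BPP;
                changed = memcmp(prev + off, cur + off, ENC_TILE * ENC_BPP) != 0;
            }
            if (changed && n < max_out)
                out[n++] = (uint16_t)(ty * ENC_TILES_X + tx);
        }
    }
    return n;
}
```

A static screen yields zero changed tiles, which matches the "nearly nothing" end of the bandwidth range described above.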

Why I Built It This Way

I’ve always wanted a Linux phone or communicator. Projects like PinePhone and Librem 5 are inspiring—they bring Linux to mobile form factors and promote open hardware. But they aim to be full-featured mobile computers. That’s not my goal.

I want something more focused: a minimal, purpose-built GUI terminal that can also be reused in other projects. A screen into my Linux system, capable of displaying full desktop applications, while keeping the embedded device lightweight and efficient.

As a developer, I need a system that fits how I already work. No mobile API sandboxes, no permission dialogs. Just Qt, Python, Java, or a Flask web server, running on a proper Linux host. Most importantly, I want full control over peripherals: input devices, sensors, actuators, and more.

Why Not Just Use VNC, RDP, or X2Go?

Building it myself taught me a lot, and that's what matters most to me. Beyond that, I wanted the flexibility of an embedded-focused codec of my own that I can adapt and modify at any time.

This isn’t just a protocol tweak. The entire pipeline—encoding, decoding, memory usage—is purpose-built for constrained environments.

Power Use and Performance Outlook

During active streaming, the device draws around 850 mW (MCU + Wi-Fi + SDRAM) and up to 1 W for the display at full brightness—totaling approximately 1.9 W.

With a standard 3.7 V, 3000 mAh lithium battery, that yields about 4–5 hours of continuous operation. Lower brightness or idle usage will extend runtime.
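The 4 to 5 hour estimate follows from the battery's energy content divided by the 1.9 W draw. The derating factor η below, covering regulator losses and usable capacity, is my assumption chosen to show how the ideal 5.8 h shrinks to the quoted range; it is not a measured value.

```latex
\begin{aligned}
E &= 3.7\,\text{V} \times 3.0\,\text{Ah} = 11.1\,\text{Wh} \\
t_{\text{ideal}} &= \frac{11.1\,\text{Wh}}{1.9\,\text{W}} \approx 5.8\,\text{h} \\
t_{\text{real}} &\approx \eta \, t_{\text{ideal}}, \quad \eta \in [0.75,\, 0.85] \;\Rightarrow\; t_{\text{real}} \approx 4.4 \text{ to } 5.0\,\text{h}
\end{aligned}
```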

Planned improvements:

- Offloading the codec to an FPGA to reduce latency and power (see Roadmap)

- Support for multiple displays

The Modular Future

This project is not just about a display—it’s a modular system meant to adapt to different embedded environments. The design enables:

- Pixel-level framebuffer sideloading over SPI or parallel buses

- Display bridges via FPGA bitstreams that support different interfaces and panel types

- Optional local rendering for overlays and UI elements, even without a network connection
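How sideloading and local overlays will be implemented isn't specified yet. As a hypothetical illustration, the basic operation both need is copying a pixel block into the framebuffer at a given position, clipped to the screen edges. The `blit_rgb888` name and the RGB888 layout are my assumptions, not part of the project.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

/* Copy a w x h RGB888 block (e.g. a sideloaded UI element or a locally
   rendered overlay) into an RGB888 framebuffer at (x, y), clipping any
   part that falls outside the fb_w x fb_h screen. */
void blit_rgb888(uint8_t *fb, int fb_w, int fb_h,
                 const uint8_t *src, int w, int h, int x, int y)
{
    int x0 = x < 0 ? 0 : x;
    int y0 = y < 0 ? 0 : y;
    int x1 = (x + w > fb_w) ? fb_w : x + w;
    int y1 = (y + h > fb_h) ? fb_h : y + h;
    if (x0 >= x1 || y0 >= y1)
        return; /* block lies entirely off-screen */
    for (int row = y0; row < y1; row++) {
        /* Skip the clipped rows/columns of the source block. */
        const uint8_t *s = src + ((size_t)(row - y) * w + (size_t)(x0 - x)) * 3;
        uint8_t *d = fb + ((size_t)row * fb_w + x0) * 3;
        memcpy(d, s, (size_t)(x1 - x0) * 3);
    }
}
```

Because the blit only touches the target rectangle, the streamed frame underneath stays intact everywhere else, which fits the idea of the streaming path as an always-working base layer.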

The idea is that you always have a fallback: the main streaming path ensures a working GUI, even in minimal setups. But over time, you can enrich your system—by sideloading optimized UI blocks, adding local overlays, or routing through custom display bridges. Each layer can be upgraded independently, while the base functionality remains usable throughout.

The goal is a lightweight, reusable system that can act as a general-purpose GUI output layer—fully controllable and adaptable across use cases.

Target Audience

This project is intended for people who work with embedded systems and want more flexible or streamlined access to Linux GUIs:

- Embedded developers working with headless boards and constrained environments

- System integrators looking for display solutions without heavy OS dependencies

- FPGA and microcontroller enthusiasts interested in lightweight graphics pipelines

- Anyone who wants more control over how displays integrate into their Linux-based workflows

Why I'm Sharing This Now

I want to see if this idea resonates. If you're a builder, system developer, or embedded hacker and this excites you, I want your feedback.

Leave a comment on Hackaday, or PM me via Reddit or BlueSky. The concept is proven—I’m now focused on turning it into something real and optimized.

About Open Source and Access

The project is intended to be fully open—hardware, firmware, protocol. But I’m not dumping half-documented prototype code into the wild.

Right now, the software stack is volatile, evolving, and carries technical debt. Releasing it prematurely would lead to confusion and fragmentation before the core is even stable.

Once the foundation is documented and solid, everything will be released under open licenses. That’s the goal.

Interested?

This is a real project. Boards are running. Displays are rendering. Frames are streaming. If you want to support me, follow me for updates. If I find enough like-minded people, I plan to sell these boards.

Want to give feedback? Find the discussion thread on Hackaday or write me a PM via Reddit or BlueSky.

— Gitzi